- cross-posted to:

- technology@beehaw.org

A new study published in Nature by University of Cambridge researchers just dropped a pixelated bomb on the entire Ultra-HD market, but as anyone with myopia can tell you, if you take your glasses off, even SD still looks pretty good :)

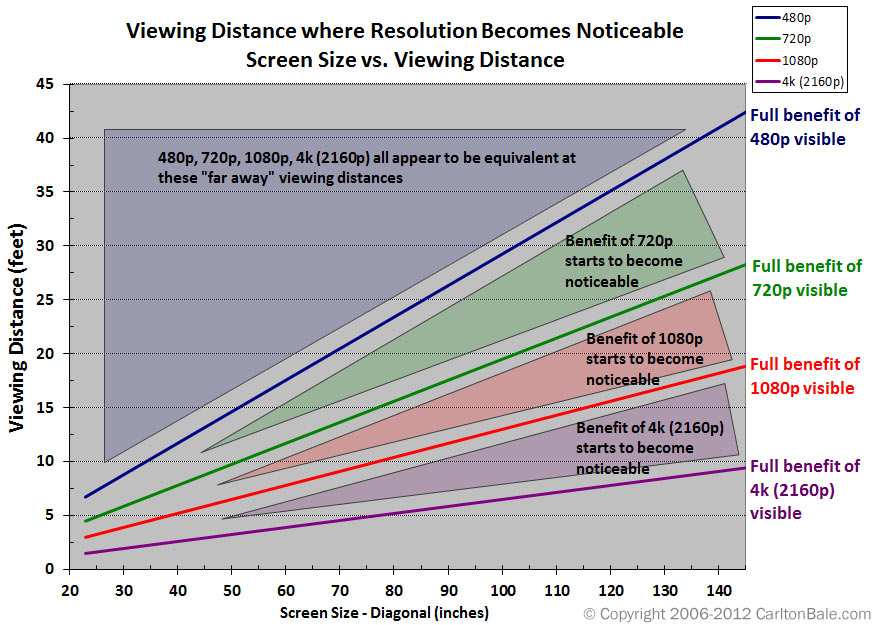

The study used a 44-inch TV at 2.5m. The most commonly used calculator for minimum TV size at a given viewing distance says that at 2.5m the TV should be at least 60 inches.
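To see how far apart those two setups are, here is a small sketch that computes the horizontal angle each screen fills. It assumes a flat 16:9 panel viewed head-on from its centre. The 60" result lands near 30°, which suggests (our assumption, not something the calculator states here) that it targets roughly a 30° viewing angle, while the study's 44" screen fills only about 22°.

```python
import math

def horizontal_angle_deg(diagonal_in: float, distance_m: float, aspect=(16, 9)) -> float:
    """Horizontal angle (degrees) a flat screen subtends when viewed head-on from its centre."""
    w, h = aspect
    # Convert the diagonal to metres, then take the width share of the 16:9 diagonal.
    width_m = diagonal_in * 0.0254 * w / math.hypot(w, h)
    return math.degrees(2 * math.atan(width_m / 2 / distance_m))

study = horizontal_angle_deg(44, 2.5)       # the study's 44" TV at 2.5 m
calculator = horizontal_angle_deg(60, 2.5)  # the calculator's 60" minimum at 2.5 m
print(f"44 in at 2.5 m: {study:.1f} deg")
print(f"60 in at 2.5 m: {calculator:.1f} deg")
```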

My own informal tests at home with a 65-inch TV, comparing a 1080p and a 4K Remux of the same movie, seem to agree with the distance calculator. At the recommended distance or closer, I can see a difference if I am viewing critically (as opposed to casually). Beyond a certain distance, the difference is not apparent.

Exactly. This title is just clickbait.

The actual study’s title is “Resolution limit of the eye — how many pixels can we see?”.

Shhh – the ISPs need a reason to sell bigger data plans. Please think of the ISPs…

Hard disagree. 4K is stunning, especially Samsung’s Neo-QLED. I cannot yet tell a difference between 4K and 8K, though.

I suspect screen size would make the difference. You won’t notice 4K or 8K on small screens.

It’s the ratio of screen size to distance from the screen. But typically you sit further from larger screens, so there’s an optimization problem in there somewhere.
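One common way to express that ratio is pixels per degree (PPD) of visual angle. A minimal sketch, assuming a flat 16:9 screen viewed head-on and averaging across its width; the usual 20/20 rule of thumb is about 60 PPD (one pixel per arcminute), and the study may use a different threshold that isn't restated here. The last line shows why it is a ratio: doubling both size and distance leaves PPD unchanged.

```python
import math

def pixels_per_degree(h_pixels: int, diagonal_in: float, distance_m: float) -> float:
    """Average horizontal pixels per degree of visual angle for a 16:9 screen viewed head-on."""
    width_m = diagonal_in * 0.0254 * 16 / math.hypot(16, 9)
    angle_deg = math.degrees(2 * math.atan(width_m / 2 / distance_m))
    return h_pixels / angle_deg

# The study's 44-inch screen at 2.5 m, at two resolutions.
for name, px in [("1080p", 1920), ("4K", 3840)]:
    print(name, round(pixels_per_degree(px, 44, 2.5), 1))

# Doubling both size and distance gives the same angular resolution.
assert math.isclose(pixels_per_degree(3840, 44, 2.5), pixels_per_degree(3840, 88, 5.0))
```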

Honestly, after using the Steam Deck (800p), I’m starting to wonder if res matters that much. Like, I can definitely see the difference, but it’s not that big a deal? All I feel like I got out of my 4K monitor is lower frame rates.

It highly depends on screen size and viewing distance, but nothing reasonable in a normal home will probably ever need more than 8K for a high-end setup, or more than 4K in most cases.

Contrast ratio/HDR and per-pixel backlighting type technology is where the real magic is happening.

Depends on your eyes quite a bit, too. If I’m sitting more than 15’ back from a 55" screen, 1080p is just fine. Put on my distance glasses and I might be able to tell the difference with 4K.

This is so much bullshit. 4K does make a difference, especially if playing console games on a large TV (65" and up).

Console games that all run at <720p, getting upscaled to hell and back. We have come so far since the PS3, where games ran at <720p but without upscaling. lol

If you’re sitting 3’ from the screen, sure. Even 8K is better, if your hardware can drive it.

4k is way better than 1080p, it’s not even a question. You can see that shit from a mile away. 8k is only better if your TV is comically large.

I can immediately tell when a game is running at 1080p on my 2K monitor (yeah, I’m not interested in 4K over higher refresh rate, so I’m picking the middle ground.)

It’s blatantly obvious when everything suddenly looks muddy and washed together.

I think that’s relevant to the discussion though. Most people sit like two feet from their gaming monitor and lean forward in their chair to make the character go faster.

But most people put a big TV on the other side of a boring white room, with a bare white ikea coffee table in between you and it, and I bet it doesn’t matter as much.

I bet the closest people ever are to their TV is when they’re at the store buying it…

I think you overestimate the quality of many humans’ eyes. Many people walk around with slightly bad vision and have no problem. Many older folks have bad vision even when corrected. I cannot distinguish between 1080p and 4K in the majority of circumstances. Stick me in front of a computer and I can notice, but TVs and computers are at wildly different distances.

And the size of most people’s TV versus how far away they are.

Yeah, a lot of people have massive TVs if you’re into sports, but most people have more reasonably sized TVs.

I used to have 20/10 vision, this 20/20 BS my cataract surgeon says I have now sucks.

That’s what you humans get for having the eyes from fish

HDR 1080p is what most people can live with.

4k is perfectly fine for like 99% of people.

4k is overkill for 99% of people

640K of RAM is all anybody will ever need.

1920x1080 is plenty, if the screen is under 50" and the viewer is more than 10’ away and they have 20/20 vision.

Sony Black Trinitron was peak TV.

Kind of a tangent, but properly encoded 1080p video with a decent bitrate actually looks pretty damn good.

A big problem is that we’ve gotten so used to streaming services delivering visual slop, like YouTube’s 1080p option which is basically just upscaled 720p and can even look as bad as 480p.

This. The visual difference between good and bad 1080p is bigger than between good 1080p and good 4K. I will die on this hill. And YouTube’s 1080p is garbage on purpose so they get you to buy Premium to unlock good 1080p. Assholes

I can still find 480p videos from when YouTube first started that rival the quality of the compressed crap “1080p” we get from YouTube today. It’s outrageous.

Sadly, most of those older YouTube videos have been run through multiple re-compressions and look so much worse than they did at upload. It’s a major bummer.

Yeah, I’d way rather have higher-bitrate 1080p than 4K. Seeing striping in big dark or light areas of the screen is infuriating.

For most streaming? Yeah.

Give me a good 4k Blu-ray though. High bitrate 4k

I mean yeah I’ll take higher quality. I’d just rather have less lossy compression than higher resolution

i’d rather have proper 4k.

HEVC is damn efficient. I don’t even bother with HD because a 4K HDR encode of around 5-10GB looks really good and streams well for my remote users.
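For a sense of what 5-10GB means in streaming terms, here is a quick conversion to average bitrate. The two-hour runtime is a hypothetical value (the comment doesn't give one), and 1 GB is taken as 10^9 bytes. Under those assumptions it works out to roughly 5.6 to 11.1 Mbit/s on average, though peaks in a variable-bitrate encode can run much higher.

```python
def average_bitrate_mbps(file_size_gb: float, runtime_min: float) -> float:
    """Average bitrate in Mbit/s for a whole file, taking 1 GB = 10**9 bytes."""
    bits = file_size_gb * 1e9 * 8
    return bits / (runtime_min * 60) / 1e6

# Hypothetical two-hour film at the quoted 5-10 GB sizes.
for size in (5, 10):
    print(f"{size} GB over 120 min ≈ {average_bitrate_mbps(size, 120):.1f} Mbit/s")
```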

I stream YouTube at 360p. Really don’t need much for that kind of video.

360p is awful, 720p is the sweet spot IMO.

Sure, but hear me out: imagine having most of your project’s source code on the screen at the same time without having to line-wrap.

I’ve been using “cheap” 43" 4K TVs as my main monitor for over a decade now. I used to go purely with Hisense; they have great colour and PC text clarity, and I could get them most places for $250 CAD. But with this year’s model they switched from an RGB subpixel layout to BGR, which is tricky to get working cleanly on a computer, even when forcing a BGR layout in the OS. One trick is to just flip the TV upside down (yes, it actually works), but that made the whole physical setup awkward. I recently went with a Sony for significantly more, but the picture quality is fantastic.

And then there’s the dev that still insists on limiting lines to 80 chars & you have all that blank space to the side & have to scroll forever per file, sigh….

Split screen yo
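To put rough numbers on the split-screen idea, this sketch counts how many 80-column panes fit side by side. The 9-pixel glyph width and 6-character line-number gutter are hypothetical; real values depend on the font, its size, and OS scaling, and pane borders are ignored. With those assumptions a 3840-pixel-wide panel fits 5 panes (4 with gutters), versus 2 on a 1920-pixel panel.

```python
def panes_that_fit(screen_px: int, cell_px: int, columns: int = 80, gutter_chars: int = 0) -> int:
    """How many side-by-side editor panes of `columns` characters fit across the screen."""
    chars_across = screen_px // cell_px          # whole monospace cells per row
    return chars_across // (columns + gutter_chars)

# Hypothetical 9 px-wide monospace glyphs.
print(panes_that_fit(3840, 9))                  # 4K width, no gutter
print(panes_that_fit(3840, 9, gutter_chars=6))  # 4K width, 6-char gutter per pane
print(panes_that_fit(1920, 9))                  # 1080p width, no gutter
```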

I can pretty confidently say that 4K is noticeable if you’re sitting close to a big TV. I don’t know that 8K would ever really be noticeable, unless the screen is strapped to your face, à la VR. For most cases, 1080p is fine, and past HD other factors start to matter way more than resolution: bitrate, compression type, dynamic range, etc.

Would be a more useful graph if the y axis cut off at 10, less than a quarter of what it plots.

Not sure in what universe discussing the merits of 480p at 45 ft is relevant, but it ain’t this one. If I’m sitting 8 ft away from my TV, I will notice the difference if my screen is over 60 inches, which is where the vast majority of consumers operate.

People are legit sitting 15+ feet away and thinking a 55 inch TV is good enough… Optimal viewing angles for most reasonably sized rooms require a 100+ inch TV and 4k or better.
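Working backwards from a viewing angle gives the screen size a room needs. This sketch assumes a 16:9 panel and uses 30° and 40° as stand-ins for commonly cited home-theatre guidelines (these figures are our assumption, not from the comment). At 15 ft that comes to roughly 111" and 150" diagonals, so "100+ inch" is consistent with even the lower figure.

```python
import math

def diagonal_for_angle(distance_ft: float, angle_deg: float) -> float:
    """Diagonal (inches) of a 16:9 screen that subtends `angle_deg` horizontally at `distance_ft`."""
    width_in = 2 * distance_ft * 12 * math.tan(math.radians(angle_deg) / 2)
    return width_in * math.hypot(16, 9) / 16  # width back to 16:9 diagonal

for angle in (30, 40):
    print(f"{angle} deg at 15 ft needs about {diagonal_for_angle(15, angle):.0f} in")
```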

So, a 55-inch TV, which is pretty much the smallest 4k TV you could get when they were new, has benefits over 1080p at a distance of 7.5 feet… how far away do people watch their TVs from? Am I weird?
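The 7.5 ft figure can be sanity-checked with the classic 1-arcminute (20/20) rule of thumb: beyond the distance where a single 1080p pixel subtends less than one arcminute, 4K's extra pixels shouldn't be resolvable. This is a simplified sketch assuming a 16:9 panel and a pixel at screen centre; the study's own acuity threshold may differ. It puts the crossover for a 55" 1080p screen at a little over 7 ft, in the same ballpark as the chart.

```python
import math

def max_useful_distance_ft(h_pixels: int, diagonal_in: float, arcmin: float = 1.0) -> float:
    """Distance beyond which one pixel subtends less than `arcmin` arcminutes (16:9 screen)."""
    width_in = diagonal_in * 16 / math.hypot(16, 9)
    pitch_in = width_in / h_pixels
    return pitch_in / math.tan(math.radians(arcmin / 60)) / 12

d_1080 = max_useful_distance_ft(1920, 55)
d_4k = max_useful_distance_ft(3840, 55)
print(f"55 in: 4K stops beating 1080p beyond ~{d_1080:.1f} ft")
print(f"55 in: 4K pixels themselves become unresolvable beyond ~{d_4k:.1f} ft")
```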

And at the size of computer monitors, for the distance they are from your face, they would always get the full benefit on this chart, and would even benefit from 8K to a decent degree.

And that’s only for people with typical vision; for people with above-average acuity, the benefits would start further away.

But yeah, for VR for sure, since having an 8k screen there would directly determine how far away a 4k flat screen can be properly re-created. If your headset is only 4k, a 4k flat screen in VR is only worth it when it takes up most of your field of view. That’s how I have mine set up, but I would imagine most people would prefer it to be half the size or twice the distance away, or a combination.
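The headset-versus-virtual-screen relationship can be sketched as an angular budget: for each virtual pixel to get one headset pixel, the virtual screen must span (virtual pixels ÷ headset PPD) degrees. The 100° per-eye field of view and the "4K"/"8K" per-eye widths below are hypothetical, and the even spread of pixels across the FOV ignores lens distortion. Under those assumptions a 4K virtual screen needs the whole 100° on a 4K-per-eye headset, but only 50° on an 8K-per-eye one.

```python
def virtual_screen_width_deg(virtual_h_pixels: int, headset_h_pixels: int, headset_fov_deg: float) -> float:
    """Horizontal angle a virtual screen must span so each of its pixels gets one headset pixel."""
    headset_ppd = headset_h_pixels / headset_fov_deg  # assumes pixels spread evenly across the FOV
    return virtual_h_pixels / headset_ppd

# Hypothetical headsets with a 100-degree horizontal FOV per eye.
print(virtual_screen_width_deg(3840, 3840, 100))  # "4K" per eye
print(virtual_screen_width_deg(3840, 7680, 100))  # "8K" per eye
```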

So 8K screens in VR will be very relevant for augmented reality, since performance costs there are pretty low anyway. And they would still convey benefits if you are running actual VR games at half the physical panel resolution because the performance demand would otherwise be too high. You get some relatively free upscaling then. It won’t look as good as native 8K, but it helps a bit anyway.

There is also fixed and dynamic foveated rendering to think about. With an 8K screen, running only 10% of it at that resolution, 20% at 4K, 30% at 1080p, and the remaining 40% at 540p, you’ll get a notable reduction in performance cost, even with the overhead of so many foveation steps. Fixed foveated rendering would likely need to lean towards bigger percentages at higher resolutions, but it has the performance advantage of not having to move around at all from frame to frame, so it can benefit from more pre-planning and optimization.
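The tier split above can be turned into a rough pixel-count estimate. Each step down (8K to 4K to 1080p to 540p) halves the linear resolution, so it quarters the pixel count. This counts shaded pixels only and ignores the per-tier overhead the comment mentions, so it is an optimistic figure rather than a performance prediction: about 17.5% of the full-8K pixel count.

```python
# (label, share of screen area, linear scale relative to native 8K)
tiers = [
    ("8K", 0.10, 1.0),
    ("4K", 0.20, 0.5),
    ("1080p", 0.30, 0.25),
    ("540p", 0.40, 0.125),
]

def relative_shading_cost(tiers) -> float:
    """Pixels shaded relative to rendering everything at native resolution.
    Pixel count scales with the square of the linear scale."""
    assert abs(sum(share for _, share, _ in tiers) - 1.0) < 1e-9  # shares must cover the screen
    return sum(share * scale ** 2 for _, share, scale in tiers)

cost = relative_shading_cost(tiers)
print(f"{cost:.1%} of the full-8K pixel count")
```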

A lot of us mount a TV on the wall and watch from a couch across the room.

And you get a TV small enough that it doesn’t suit that purpose? Looks like 75 inch to 85 inch is what would suit that use case. Big, but still common enough.

I’ve got an LCD 55" TV and a 14" laptop. On the couch, the TV screen looks to me about as big as the laptop screen on my belly/lap, and I’ve got perfect vision; on the laptop I can clearly see the difference between 4K and Full HD, on the TV, not so much.

I think TV screens aren’t as good as PC ones, but also the TVs’ image processors turn 1080p files into better images than computers do.

Hmm, I suppose the quality of the TV might matter. Not to mention actually going through the settings and making sure it isn’t doing anything to process the signal. And also not streaming compressed crap to it. I do visit other people’s houses sometimes and definitely wouldn’t know they were using a 4K screen to watch what they are watching.

But I am assuming actually displaying 4k content to be part of the testing parameters.

Seriously, articles like this are just clickbait.

They also ignore all sorts of use cases.

Like for a desktop monitor, 4K is extremely noticeable vs even 1440p or 1080p/2K.

Unless you’re sitting very far away, the sharpness of text and therefore amount of readable information you can fit on the screen changes dramatically.
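To put a number on the text-sharpness point, here is pixel density for a hypothetical 27" monitor (the size is our assumption; the comment doesn't give one). At that size, 4K works out to roughly 163 ppi versus about 109 ppi for 1440p and 82 ppi for 1080p, which is why small text renders so much more cleanly.

```python
import math

def ppi(h_pixels: int, v_pixels: int, diagonal_in: float) -> float:
    """Pixels per inch measured along the diagonal."""
    return math.hypot(h_pixels, v_pixels) / diagonal_in

# Hypothetical 27-inch desktop monitor at three common resolutions.
resolutions = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4K": (3840, 2160)}
for name, (w, h) in resolutions.items():
    print(f'27" {name}: {ppi(w, h, 27):.0f} ppi')
```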

The article was about TVs, not computer monitors. Most people don’t sit nearly as close to a TV as they do a monitor.

Oh absolutely, but even TVs are used in different contexts.

Like the thing about text applies to console games, applies to menus, applies to certain types of high detail media etc.

Complete bullshit articles. The same thing happened when 720p became 1080p. So many echoes of “oh, you won’t see the difference unless the screen is huge”… like no, you can see the difference on a tiny screen.

We’ll have these same bullshit arguments when 8k becomes the standard, and for every large upgrade from there.

Good to know that pretty much anything looks fine on my TV, at typical viewing distances.

There’s a giant TV at my gym that is mounted right in front of some of the equipment, so my face is inches away. It must have some insane resolution because everything is still as sharp as a standard LCD panel.

How many feet away is a computer monitor?

Or a 2-4 person home theater distance that has good fov fill?

The counterpoint is that if you’re sitting that close to a big TV, it’s going to fill your field of view to an uncomfortable degree.

4k and higher is for small screens close up (desktop monitor), or very large screens in dedicated home theater spaces. The kind that would only fit in a McMansion, anyway.

This is why I still use 768p as my preferred resolution, despite having displays that can go much higher. I hate that all TVs now are trying to go as big as possible, when it’s just artificially inflating the price for no real benefit. I also hate that modern displays aren’t as flexible as CRTs were. CRTs can handle pretty much any resolution you throw at them, but modern TVs and monitors freak out if you don’t use an exact resolution: either the display has to upscale the image, adding input lag, or it forces the connected device to handle the upscaling, with a potential performance hit.
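Part of why non-native resolutions look soft on fixed-pixel panels is the scale factor. When the panel's line count is a whole multiple of the input's, each source pixel can map to a whole block of panel pixels; otherwise the scaler has to interpolate. This sketch only checks vertical line counts (768p sources are often 1366 wide, which complicates the horizontal axis), and whether a given TV actually uses integer scaling when it could varies by model.

```python
from fractions import Fraction

def scale_factor(source_lines: int, panel_lines: int) -> Fraction:
    """Exact vertical scale factor needed to fill the panel with the source image."""
    return Fraction(panel_lines, source_lines)

for src, panel in [(768, 1080), (720, 1080), (1080, 2160), (720, 2160)]:
    f = scale_factor(src, panel)
    kind = "integer" if f.denominator == 1 else "non-integer"
    print(f"{src}p on a {panel}-line panel: x{float(f):.4g} ({kind})")
```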